Hardware Utilization / Usage Estimation with Deep Learning

Open as MSc or BSc thesis topic

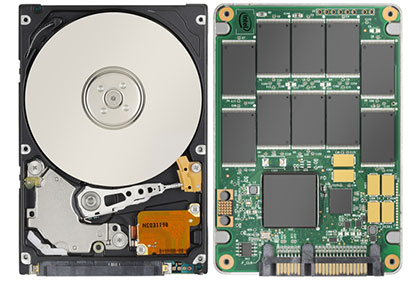

Today, many data-intensive workloads are compute- and memory-hungry, ranging from big data analytics applications to deep learning. These workloads increasingly run on shared hardware resources, which calls for hardware resource managers that can both serve the needs of the workloads and utilize the hardware well. Predicting the resource utilization of applications can help such resource managers make scheduling decisions for those applications. In this project, we aim to investigate how effectively different deep learning techniques can predict the hardware resource needs of applications.

The project has two steps and is therefore most suitable for an MSc thesis at ITU. The first step is to survey and determine the application features and learning techniques that are most relevant for this goal. In this phase, deep learning training is also among the target applications. The second step is to quantify the efficiency and effectiveness of these techniques and to generalize them to different types of applications and workloads.

Contact